Photogrammetry with large-frame sensors

For professional photogrammetrists, the objective of mapping is not just to generate simple 2D orthomosaics and visually pleasing 3D models, but to create cartography that provides accurate locations and precise measurements.

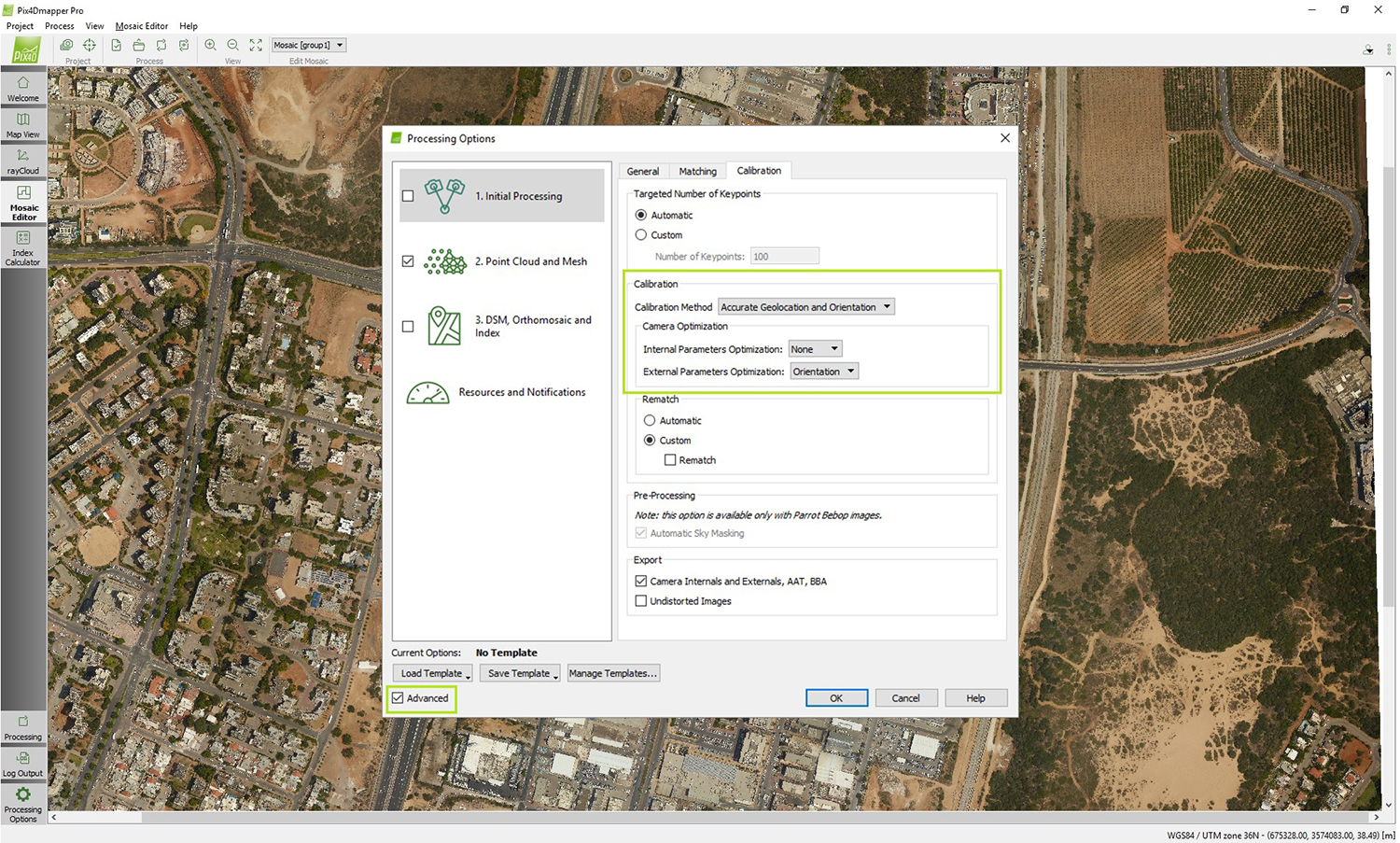

Pix4Dmapper photogrammetry software can process large-frame images through an add-on, which is intended for images larger than 55 megapixels. Users of metric cameras, such as the UltraCam, can enter pre-calibrated interior and exterior camera parameters in Pix4Dmapper.

Choosing whether to fix or to recompute each parameter makes the workflow more flexible and efficient, so accurate cartography can be generated in a short time. Here are some useful features for professional photogrammetrists:

Fixing selected pre-calibrated camera interiors

Pix4Dmapper supports the input of pre-calibrated camera interior parameters, such as focal length, principal point of autocollimation (PPA), and lens distortion coefficients. Being able to choose whether to fix or to recompute them is especially important for metric cameras, whose interior parameters are pre-calibrated in labs. Those values should carry more weight and should not be recomputed every time based on the image content.
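To make the role of these interior parameters concrete, here is a minimal sketch of how a focal length, a principal point, and radial distortion coefficients map a point in the camera frame to a pixel. It assumes a generic two-coefficient radial model; the function name `project_to_pixel` and its arguments are illustrative and are not taken from Pix4Dmapper, whose internal camera model may differ.

```python
import numpy as np

def project_to_pixel(point_cam, focal_px, principal_point, radial=(0.0, 0.0)):
    """Project a 3D point in the camera frame (Z pointing forward) to pixels.

    Assumed model (illustrative only): pinhole projection followed by a
    two-coefficient radial distortion applied to normalised coordinates.
    """
    X, Y, Z = point_cam
    # Normalised image coordinates on the plane Z = 1
    x, y = X / Z, Y / Z
    r2 = x * x + y * y
    k1, k2 = radial
    # Radial distortion factor: 1 + k1*r^2 + k2*r^4
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    cx, cy = principal_point
    # Scale by the focal length (in pixels) and shift by the principal point
    return np.array([focal_px * scale * x + cx, focal_px * scale * y + cy])

# A point on the optical axis lands exactly on the principal point.
print(project_to_pixel((0.0, 0.0, 10.0), 1000.0, (500.0, 400.0)))
```

When the lab-calibrated values are fixed, every term in this mapping stays constant during processing and only the exterior parameters are left to estimate.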

Fixing selected camera exteriors

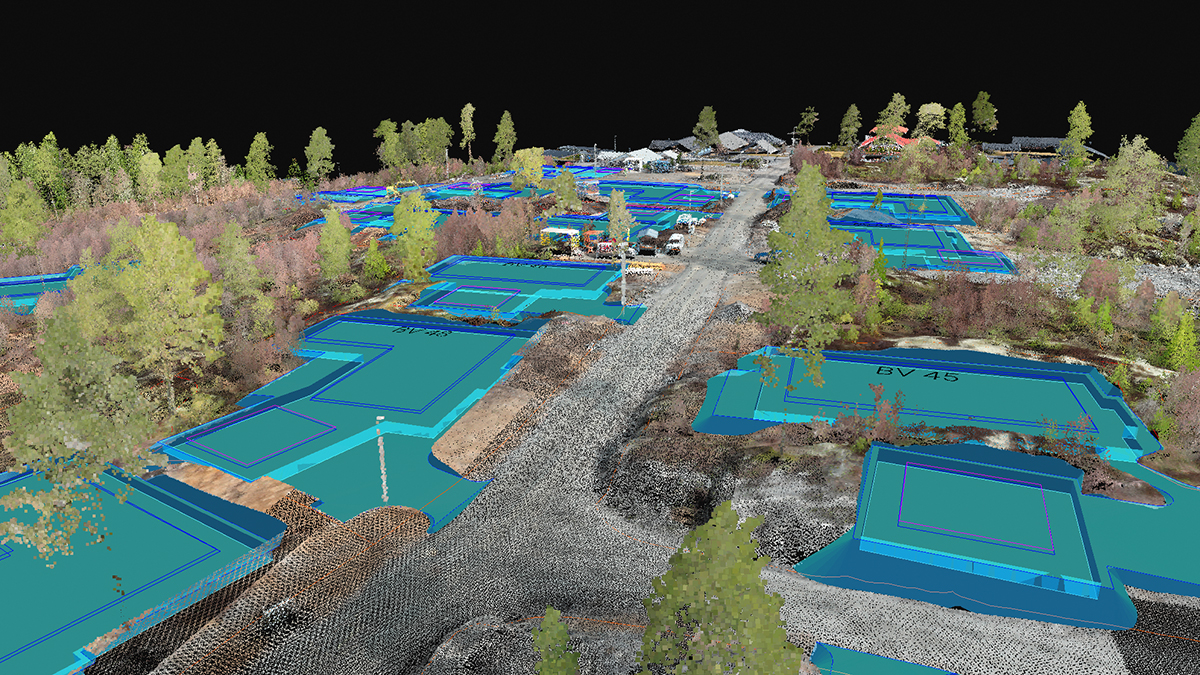

Direct georeferencing is a more recent method that lets traditional photogrammetrists avoid surveying ground control points for every project. It supplies accurate values for the six exterior orientation parameters (x, y, z, omega, phi, kappa) of each image, which are then used to produce the surface model and orthomosaic.
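As an illustration of the three angular parameters, the sketch below builds a rotation matrix from omega, phi, and kappa. It assumes the common photogrammetric convention of successive rotations about the x, y, and z axes, composed as R = R_x(omega) R_y(phi) R_z(kappa), with angles in radians. Other axis orders and sign conventions exist, so check the convention of your own data before reusing it; the function name `rotation_from_opk` is illustrative.

```python
import numpy as np

def rotation_from_opk(omega, phi, kappa):
    """Rotation matrix from omega, phi, kappa (radians).

    Assumed convention: R = R_x(omega) @ R_y(phi) @ R_z(kappa).
    """
    co, so = np.cos(omega), np.sin(omega)
    cp, sp = np.cos(phi), np.sin(phi)
    ck, sk = np.cos(kappa), np.sin(kappa)
    # Elementary rotations about the x, y, and z axes
    rx = np.array([[1.0, 0.0, 0.0], [0.0, co, -so], [0.0, so, co]])
    ry = np.array([[cp, 0.0, sp], [0.0, 1.0, 0.0], [-sp, 0.0, cp]])
    rz = np.array([[ck, -sk, 0.0], [sk, ck, 0.0], [0.0, 0.0, 1.0]])
    return rx @ ry @ rz

# Example angles in radians; the result is a proper rotation,
# so R @ R.T is (numerically) the identity matrix.
R = rotation_from_opk(0.01, -0.02, 1.5)
print(np.round(R @ R.T, 6))
```

Together with the projection centre (x, y, z), this matrix fully describes where the camera was and which way it was pointing when an image was taken.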

Direct georeferencing requires a one-time pre-project flight over a calibration field. This flight gives the camera's shift in the x, y, and z directions from the GPS receiver and its rotation angles around the x, y, and z axes relative to the inertial measurement unit (IMU). These values do not change unless the camera is re-installed, which alters its relative position and orientation. The entire flight path can be computed by interpolating the mobile station locations received from the GPS receiver using the higher-frequency IMU data, and then adjusted based on known base stations. Finally, locating each image's trigger time along the path, based on GPS time, gives the location and orientation of each image.
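The last two steps can be sketched in a few lines. This is a simplified illustration, not the procedure used by any particular GPS/IMU processing package: it interpolates linearly between GPS fixes rather than using IMU data, and it assumes the calibrated shift is expressed as a vector from the GPS antenna to the camera projection centre in the aircraft body frame. The names `interpolate_position`, `camera_centre`, and `lever_arm_body` are hypothetical.

```python
import numpy as np

def interpolate_position(trigger_time, gps_times, gps_positions):
    """Linearly interpolate the antenna position at an image trigger time.

    gps_times must be increasing; gps_positions has shape (n, 3).
    """
    gps_positions = np.asarray(gps_positions, dtype=float)
    return np.array([
        np.interp(trigger_time, gps_times, gps_positions[:, axis])
        for axis in range(3)
    ])

def camera_centre(antenna_position, body_to_world, lever_arm_body):
    """Apply the calibrated shift (assumed: antenna to camera, body frame)."""
    antenna_position = np.asarray(antenna_position, dtype=float)
    # Rotate the body-frame offset into the mapping frame, then add it
    return antenna_position + np.asarray(body_to_world) @ np.asarray(lever_arm_body)

# Illustrative values: three GPS fixes one second apart, trigger at t = 1.5 s
gps_times = [0.0, 1.0, 2.0]
gps_positions = [[0.0, 0.0, 100.0], [10.0, 0.0, 100.0], [20.0, 5.0, 102.0]]
antenna = interpolate_position(1.5, gps_times, gps_positions)
print(camera_centre(antenna, np.eye(3), [0.1, 0.0, -0.5]))
```

In practice the body-to-world rotation at the trigger time comes from the IMU, combined with the calibrated rotation angles between the IMU and the camera.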