Automatic point cloud classification for construction

At Pix4D, much as in the early days of 3D laser scanners, we are pioneering next-generation technology.

We started by using drone imagery and machine vision photogrammetry techniques to revolutionize the construction surveying industry. The quality, cost and benefits of drone-based surveys have taken the construction industry by storm. More recently, we have gone beyond our machine vision techniques for photogrammetry and delved into machine-learning processes to deliver classification of drone-based point clouds, a major step forward in automatic data recognition and reconstruction for the industry.

Why do we need to classify point clouds?

Automatic classification sorts points into useful, logical categories, such as road surfaces, building roofs and trees. Without this capability, users must spend hours of tedious work isolating the data of interest.

The limits of traditional terrestrial surveying methods

Until now, only airborne LiDAR systems and software offered the valuable ability to classify points into groups automatically. Airborne systems use sensing technologies that allow more analysis of the returned laser energy.

Smart algorithms differentiate the points and build groupings. Because you can tell the system whether you flew over a forest or over an area of buildings with sloped or flat roofs, the algorithms have more information with which to analyze and classify the points.

However, terrestrial laser scanners use different types of sensors that do not deliver the additional information needed for easy point cloud analysis and classification. While the LAS point cloud format, traditionally used by airborne LiDAR systems, supports classified point clouds, simply saving terrestrial LiDAR point clouds in the LAS format does not deliver the classified point clouds users want.
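For readers who handle LAS files directly: the classification travels with each point as a numeric code. The minimal Python sketch below shows how that code is stored in the older LAS point record formats 0-5, where a single byte holds a 5-bit class plus three flags. The mapping of this article's five classes to ASPRS codes is an assumption for illustration; in particular, "human-made object" has no standard ASPRS class, so the value 64 (the start of the LAS 1.4 user-definable range) is hypothetical, and such a value only fits in point formats 6-10, which store the class in a full byte.

```python
# ASPRS standard classes (LAS 1.4) that correspond to the article's classes.
# ASSUMPTION: "human_made_object" -> 64 is a hypothetical user-defined code.
ASPRS_CODES = {
    "ground": 2,
    "high_vegetation": 5,
    "building": 6,
    "road_surface": 11,
    "human_made_object": 64,  # not an ASPRS-defined class
}


def pack_legacy_classification(code, synthetic=False, key_point=False, withheld=False):
    """Build the classification byte used by LAS point formats 0-5.

    Bits 0-4 hold the class (0-31); bits 5, 6 and 7 are the synthetic,
    key-point and withheld flags.
    """
    if not 0 <= code <= 31:
        raise ValueError(f"class {code} needs more than 5 bits; use point formats 6-10")
    return code | (int(synthetic) << 5) | (int(key_point) << 6) | (int(withheld) << 7)


def unpack_legacy_classification(byte):
    """Split a format 0-5 classification byte into (class, synthetic, key_point, withheld)."""
    return byte & 0x1F, bool(byte & 0x20), bool(byte & 0x40), bool(byte & 0x80)
```

For example, `pack_legacy_classification(ASPRS_CODES["building"])` yields 6, while passing the hypothetical code 64 raises `ValueError`, a reminder that a custom class needs one of the newer point formats.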

Of course, some point cloud classification has begun to emerge in the industry. In some cases, tools do a reasonable job of finding the ground, one of the most common requirements. In others, they sidestep the problem of classifying the entire cloud and simply look for specific shapes, such as cylinders, to help find pipes.
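To give a feel for what "finding the ground" can involve, here is a minimal Python sketch of one of the simplest possible approaches, a grid-minimum filter. It is an illustration under stated assumptions, not how any particular product works: the function name, cell size and height tolerance are hypothetical, and the method breaks down on steep terrain and under large flat roofs.

```python
import math
from collections import defaultdict


def grid_min_ground(points, cell=1.0, tolerance=0.2):
    """Flag each (x, y, z) point as ground if it lies within `tolerance`
    of the lowest point in its `cell` x `cell` grid cell.

    Deliberately simple: a cell covered entirely by a flat roof will have
    its roof labeled as ground, and slopes within a cell are ignored.
    """
    lowest = defaultdict(lambda: math.inf)
    keys = []
    for x, y, z in points:
        key = (math.floor(x / cell), math.floor(y / cell))
        keys.append(key)
        lowest[key] = min(lowest[key], z)
    # Second pass: compare every point with the minimum of its own cell.
    return [z - lowest[key] <= tolerance for (_, _, z), key in zip(points, keys)]
```

Production ground filters add slope handling and iterative surface fitting, which is why even this "most common requirement" is harder than it looks.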

In other cases, the user can pick a single point or group of points at a time, again trying to fit a specific shape such as a pipe, a steel element or a planar surface. But to date, no one has solved the complete problem of classifying the entire cloud into individual groups.
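Shape fitting of this kind is commonly done with RANSAC (random sample consensus). The sketch below fits a single plane, the simplest of the shapes mentioned; cylinder fitting for pipes follows the same sample, score, keep-the-best loop with a different model. The function name, distance threshold and iteration count are illustrative assumptions.

```python
import random


def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def ransac_plane(points, threshold=0.05, iterations=200, seed=0):
    """Fit one plane n . p + d = 0 (unit normal n) to a list of (x, y, z).

    Returns (normal, d, inlier_indices) for the candidate plane with the
    most points within `threshold` of it.
    """
    rng = random.Random(seed)
    best = (None, None, [])
    for _ in range(iterations):
        p1, p2, p3 = rng.sample(points, 3)  # minimal sample for a plane
        n = _cross(_sub(p2, p1), _sub(p3, p1))
        length = _dot(n, n) ** 0.5
        if length < 1e-12:  # collinear sample: no unique plane
            continue
        n = (n[0] / length, n[1] / length, n[2] / length)
        d = -_dot(n, p1)
        inliers = [i for i, p in enumerate(points) if abs(_dot(n, p) + d) <= threshold]
        if len(inliers) > len(best[2]):
            best = (n, d, inliers)
    return best
```

Note that this finds one shape at a time in a user-chosen region, which is exactly the limitation described above: it says nothing about the rest of the cloud.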

Pix4D sees point clouds differently

While Pix4D is known mostly for photogrammetry from drone-captured imagery, our processing delivers more than precise 2D orthomosaics and 3D meshes/models. It can also generate very dense and precise point clouds and, of course, combine LiDAR with photogrammetry.

Those familiar with terrestrial laser point clouds will notice one interesting difference in point clouds generated via photogrammetry: the terrestrial laser scanning process requires an extra step, and extra time, to provide true-color point clouds. It means running the laser scanner’s internal camera, or even an external camera, in the field and adding the processing time needed to apply true color to the point cloud.

As a result, we usually expect to see point clouds in the false-color blue-to-red intensity spectrum (mostly orange) or in a grayscale representation of the same intensity range. Intensity coloring can have some advantages, but true color is always easy to understand. Photogrammetry-generated point clouds derived from images are always rendered in true color, with no extra cost in capture equipment and no extra time in the field or office.

At Pix4D, we have now leveraged machine-learning technology to help the system “learn” how to classify point clouds. First, we created generalized algorithms to segment the point cloud into regional clusters. Then, in our learning lab, we ran hundreds of datasets and manually told the machine-learning system what each cluster represented.
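The segmentation algorithm itself is not described here. As a hedged illustration of what segmenting a point cloud into regional clusters can look like, the sketch below groups points by connectivity on a voxel grid. It is a generic technique, not Pix4D's method, and the function name and voxel size are hypothetical. In a learning workflow like the one described, each resulting cluster would then receive a label such as "building" or "tree".

```python
import math
from collections import deque


def voxel_clusters(points, voxel=0.5):
    """Return one cluster id per (x, y, z) point.

    Points are bucketed into cubic voxels of side `voxel`; occupied voxels
    that touch (including diagonally) are joined by breadth-first search.
    """
    buckets = {}
    for i, p in enumerate(points):
        key = tuple(math.floor(c / voxel) for c in p)
        buckets.setdefault(key, []).append(i)

    labels = [-1] * len(points)
    seen = set()
    cluster_id = 0
    for start in buckets:
        if start in seen:
            continue
        seen.add(start)
        queue = deque([start])
        while queue:
            vx, vy, vz = queue.popleft()
            for i in buckets[(vx, vy, vz)]:
                labels[i] = cluster_id
            # Visit the 26 neighbouring voxels (the current one is already seen).
            for dx in (-1, 0, 1):
                for dy in (-1, 0, 1):
                    for dz in (-1, 0, 1):
                        nb = (vx + dx, vy + dy, vz + dz)
                        if nb in buckets and nb not in seen:
                            seen.add(nb)
                            queue.append(nb)
        cluster_id += 1
    return labels
```

Voxel size is the key trade-off: too small and one object splits into many clusters, too large and neighbouring objects merge.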

We also use all the advantages of imagery-based machine vision techniques to maximize the algorithm’s ability to classify the point cloud data from our photogrammetry engine quickly, robustly and repeatably. Through this process, we helped the system “learn” to identify buildings, trees, hard ground surfaces, rough ground and human-made objects.

Finally, we “baked” this learned behavior into our shipping software. Now, literally at the click of a button, Pix4D automatically classifies the densified point cloud into these predetermined classes.

Every individual point in the 3D point cloud generated by Pix4D software is derived from many overlapping images that see it: typically 20 or more. Uniquely, in a Pix4D point cloud, every image used to calculate a point is linked back to that point. We call this association our rayCloud™. This combination of point cloud geometry and multiple images gives the machine learning extra information to work with.
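To make the rayCloud idea concrete, here is a hypothetical data structure in which each point keeps the list of images that observe it, along with the color sampled in each image. The class names, fields and derived features are assumptions for illustration only; they show the kind of extra per-point information that multiple views can provide, not the features Pix4D's classifier actually uses.

```python
from dataclasses import dataclass, field
from statistics import mean, pstdev


@dataclass
class Observation:
    image_id: str  # hypothetical identifier of an image that sees the point
    rgb: tuple     # color sampled where the point projects into that image


@dataclass
class RayCloudPoint:
    xyz: tuple
    observations: list = field(default_factory=list)

    def features(self):
        """Per-point features aggregated over every observing image."""
        channels = list(zip(*(o.rgb for o in self.observations)))
        return {
            "num_images": len(self.observations),
            "mean_rgb": tuple(mean(c) for c in channels),
            # Color spread across views; e.g. moving foliage or reflective
            # surfaces may vary more between images than bare ground.
            "rgb_spread": tuple(pstdev(c) for c in channels),
        }
```

A single-image pipeline would only ever see one color per point; keeping all observations lets a classifier look at agreement between views as well.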

How do we apply automatic point cloud classification to construction sites?

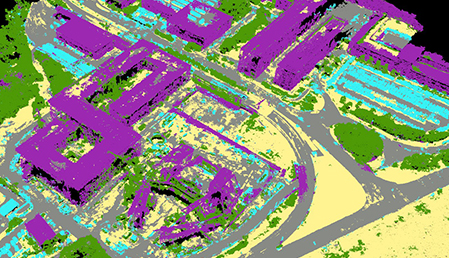

As mentioned previously, the first implementation of our machine-learning-driven process automatically classifies every point in the cloud into five predefined groups: ground, road surface, high vegetation, building and human-made object.

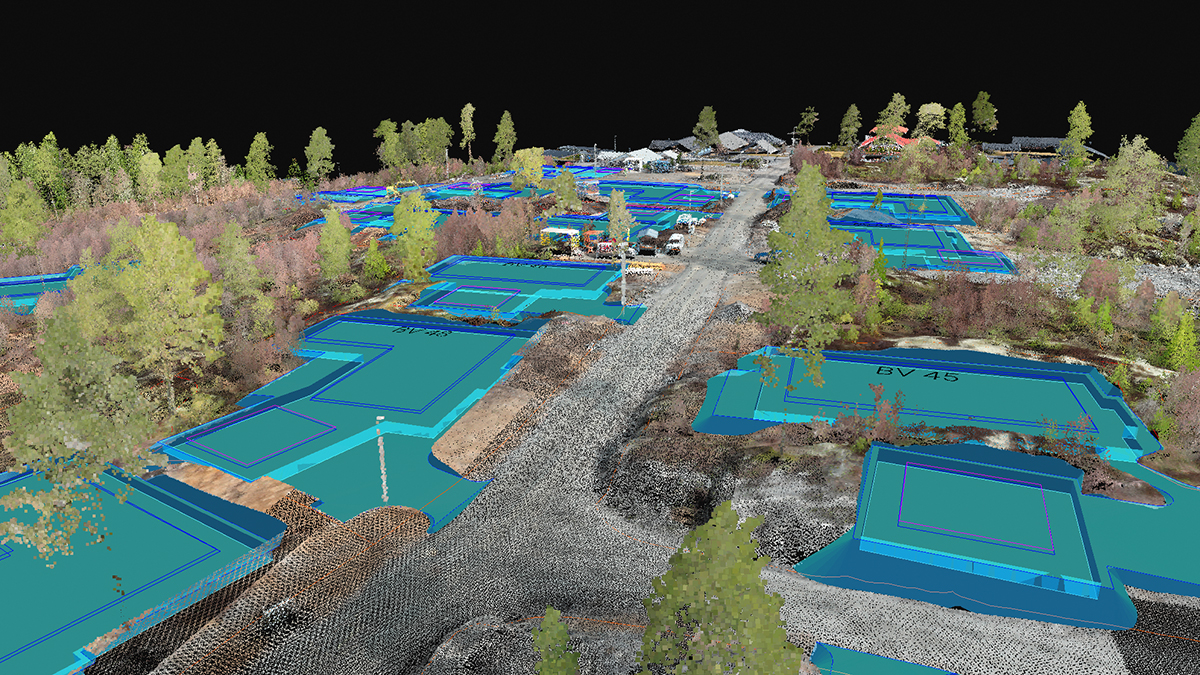

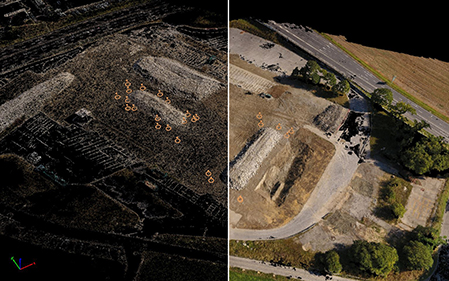

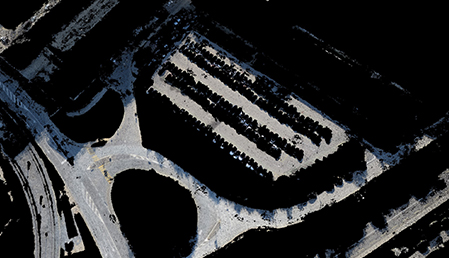

Let’s use the example of a construction project captured with a drone and processed with Pix4D. This is a zoomed-out view of the whole project area, showing the point cloud colorized with true color from the project images.

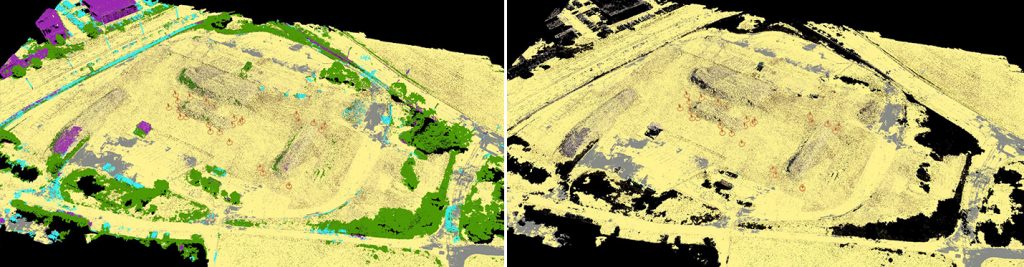

Here, we have changed the coloring to show the different classes. You can immediately see that the automatic segmentation is precise.

We can distinguish the buildings in purple, the ground in yellow, the road surface in grey, trees in green and human-made objects in cyan.
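Recoloring by class is a per-point lookup from class label to display color. The sketch below uses the colors named above; the exact RGB values, the label strings and the function name are assumptions.

```python
# Display colors named in the text; the RGB values are assumptions.
CLASS_COLORS = {
    "building": (128, 0, 128),           # purple
    "ground": (255, 255, 0),             # yellow
    "road_surface": (128, 128, 128),     # grey
    "high_vegetation": (0, 128, 0),      # green
    "human_made_object": (0, 255, 255),  # cyan
}


def colorize_by_class(labels, unknown=(0, 0, 0)):
    """Return one display color per point; unrecognized labels get `unknown`."""
    return [CLASS_COLORS.get(label, unknown) for label in labels]
```

Because the true-color values are left untouched, switching between true-color and class views is just a choice of which color array to draw.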

1. Building (larger rectilinear structures)

Focusing on a section of the project, we have isolated just the “building” class. Clipping and cleaning the point cloud manually could take hours of tedious hand work, while the Pix4D classification algorithms deliver this segmentation automatically.

2. Road surface (roads, hardpan/mostly flat surfaces including sidewalks, grass fields, playgrounds…)

Zooming into another area of the project, we have enabled only the road surface class. This includes the road, parking lots and sidewalks. The cars, buildings and trees are all gone.

3. Ground (non-flat surfaces and low vegetation)

By adding the ground class, you can see the green lawns and dirt areas added back in, while the trees, cars and buildings are still excluded.

4. High vegetation (trees, shrubs…)

You can also isolate just the high vegetation.

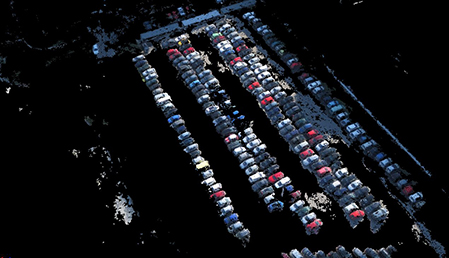

5. Human-made object (cars, small structures, light poles, signs, site equipment, machines)

Finally, turning on the human-made object class adds the cars back in. Being able to remove these elements quickly also helps improve survey accuracy.
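The walkthrough above amounts to filtering the cloud by a set of enabled classes. A minimal sketch, assuming each point carries exactly one class label stored as a string in a parallel list (a hypothetical representation), with a toy cloud standing in for real data:

```python
def visible_points(points, labels, enabled):
    """Return the points whose class label is in the `enabled` set.

    `points` and `labels` are parallel lists: labels[i] is the class of points[i].
    """
    return [p for p, label in zip(points, labels) if label in enabled]


# Replaying the walkthrough on a toy cloud (hypothetical data).
points = [(0, 0, 0.0), (1, 0, 0.2), (2, 0, 6.0), (3, 0, 9.0), (4, 0, 1.5)]
labels = ["road_surface", "ground", "building", "high_vegetation", "human_made_object"]

enabled = {"road_surface"}
road_only = visible_points(points, labels, enabled)
enabled |= {"ground"}
road_and_ground = visible_points(points, labels, enabled)
enabled |= {"human_made_object"}
with_objects = visible_points(points, labels, enabled)
```

Toggling a class never modifies the cloud itself; it only changes which subset is displayed or passed on to the next calculation.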

To give a more concrete example, consider an earthwork project. In just a few clicks, you can improve the accuracy of your stockpile or cut-and-fill calculations by automatically removing the ground, the small jobsite buildings, the human-made objects and the high vegetation that could distort the results.
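The accuracy gain comes from computing volumes on a surface that no longer contains the unwanted objects. The sketch below is a deliberately simple grid-based estimate, assuming a flat base elevation: points in excluded classes are dropped, the remaining points are averaged per grid cell, and each cell above the base contributes its height above the base times the cell area. The function name, parameters and the mean-height choice are assumptions; real volume tools interpolate a continuous surface and fill empty cells, which this sketch ignores.

```python
import math
from collections import defaultdict


def volume_above_base(points, labels, base_z, cell=1.0, excluded=frozenset()):
    """Estimate volume (in cubic units of the input) above a flat base.

    Points whose label is in `excluded` are ignored. Each `cell` x `cell`
    grid cell takes the mean z of its remaining points as its height; cells
    with no points contribute nothing.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for (x, y, z), label in zip(points, labels):
        if label in excluded:
            continue
        key = (math.floor(x / cell), math.floor(y / cell))
        sums[key] += z
        counts[key] += 1
    volume = 0.0
    for key, total in sums.items():
        height = total / counts[key]
        if height > base_z:
            volume += (height - base_z) * cell * cell
    return volume
```

As a worked example, a 4 x 4 m pile sampled at 2 m high on a 1 m grid gives 16 cells x 2 m x 1 m² = 32 m³. One car point at 3.5 m in a corner cell raises that cell's mean to 2.75 m and the total to 32.75 m³ unless the `human_made_object` class is excluded.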

Leverage point cloud data from drones in your construction workflow

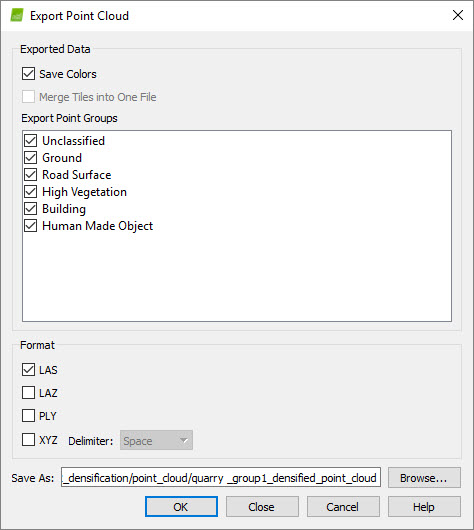

In addition to automatic classification, you can manually edit the point classifications: add or remove selected points, add new groups, and rename or delete groups, with all the functions you would expect.

You can also easily integrate Pix4D data and point clouds into existing workflows, using terrestrial laser scanner software from the scanner vendors or tools from CAD/BIM providers such as Autodesk and Bentley, all of which now support point cloud workflows. Most importantly, you can export the entire cloud as a LAS file that retains the classifications, so any software that reads LAS classifications will recognize the point groups.

For software programs that do not recognize LAS classifications, you can selectively export one or more groups so that the groupings are preserved. Now that we can automatically deliver classified sets of points in useful, logical groups, the value of the original time-saving process has grown exponentially.
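Selective export can be pictured as splitting the cloud by class and writing each selected group separately. The sketch below writes plain ASCII `x y z` files, a format almost any point cloud tool can read; the function name, the `<class>.xyz` naming and the choice of ASCII over LAS are assumptions for illustration.

```python
import os


def export_by_class(points, labels, out_dir, classes=None):
    """Write one ASCII 'x y z' file per class and return {class: path}.

    `classes` restricts the export to the selected groups; None exports all.
    """
    groups = {}
    for p, label in zip(points, labels):
        if classes is None or label in classes:
            groups.setdefault(label, []).append(p)
    os.makedirs(out_dir, exist_ok=True)
    paths = {}
    for label, pts in groups.items():
        path = os.path.join(out_dir, f"{label}.xyz")  # hypothetical naming scheme
        with open(path, "w") as f:
            for x, y, z in pts:
                f.write(f"{x} {y} {z}\n")
        paths[label] = path
    return paths
```

Since each file holds only one class, the grouping survives even in software that ignores the LAS classification field entirely.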

Drones + Pix4D = time and cost savings on construction surveys

Drones and photogrammetry alone can deliver the exponential, 10x-type improvement in cost and speed over traditional terrestrial laser scanning that we would expect from a next-generation technology. Consider what it costs in hardware, software and labor to gear up for and complete a 10-acre building campus survey with a terrestrial laser scanner compared with a drone/photogrammetry process. With reliable automatic point cloud classification added, photogrammetry-based surveying for construction has just taken another big leap ahead.